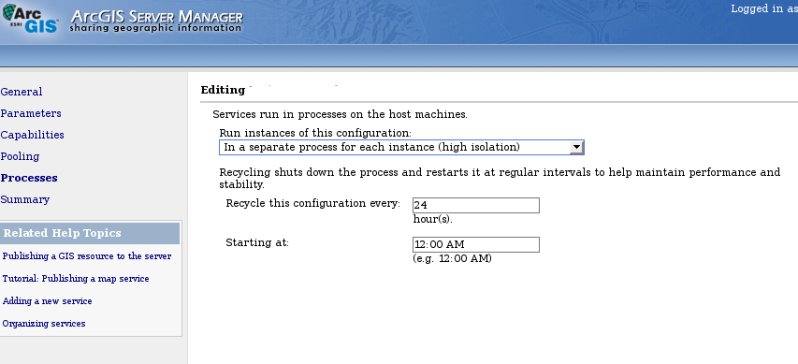

So I’ve rarely looked at ESRI software, but a friend has administrator rights on the new version of ArcGIS Server, and he decided to show me how it works. There are a lot of options, including some interesting settings for how the server processes are managed:

They have this great ‘feature’ called Recycling – ‘Recycling shuts down the process and restarts it at regular intervals to help maintain performance and stability’.

Words escape me.

Ok, not really (when have they ever?), since I want to explain this to people who don’t write software. This ‘feature’, to me, is their development team admitting defeat. They couldn’t iron out all the memory leaks and little code errors that get in the way of a well-performing, stable, robust server. When a program is broken in that way, about the only thing that fixes it is to restart the whole thing completely. So what this ‘feature’ does is make your server automatically restart itself at whatever interval you set: the bluntest possible approach to the problem.
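For readers who don’t write software, the recycling idea boils down to a small supervisor loop. This is a minimal Python sketch under stated assumptions, not how ArcGIS Server implements it: the worker command is a hypothetical stand-in (a child interpreter that just sleeps), and `run_with_recycling`, `interval_s` and `cycles` are illustrative names.

```python
import subprocess
import sys

# Hypothetical stand-in for a long-running server process: a child Python
# interpreter that just sleeps. A real supervisor would launch the server here.
WORKER_CMD = [sys.executable, "-c", "import time; time.sleep(3600)"]


def run_with_recycling(cmd, interval_s, cycles):
    """Start `cmd` and restart it every `interval_s` seconds, `cycles` times.

    Returns how many times the supervisor had to shut the process down
    (as opposed to the process exiting on its own before the interval).
    """
    recycled = 0
    for _ in range(cycles):
        proc = subprocess.Popen(cmd)
        try:
            # Let the worker run for one recycling interval.
            proc.wait(timeout=interval_s)
        except subprocess.TimeoutExpired:
            # Interval elapsed: ask the process to shut down...
            proc.terminate()
            try:
                proc.wait(timeout=5)
            except subprocess.TimeoutExpired:
                # ...and force it if it ignores the request.
                proc.kill()
                proc.wait()
            recycled += 1
    return recycled


if __name__ == "__main__":
    print(run_with_recycling(WORKER_CMD, interval_s=0.5, cycles=2))
```

Nothing in the loop knows or cares why the worker degrades; it simply throws the process away on a schedule, which is exactly why it works as a blunt instrument.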

Now I’m not saying we’ve never had memory leaks in GeoServer; quite the contrary. Indeed, we had to delay 1.6.0 and do another release candidate because we found one that only built up over a long time, when users were requesting really large maps. All I’m saying is that adding something like this is admitting defeat: it says they don’t necessarily even plan to fix all the little bugs that are causing this. And more than anything, I just love the doublespeak of playing it as a feature that helps ‘maintain performance and stability’. Say what you will about their software, they remain absolutely brilliant marketers, and I have a ton to learn from them in that realm.

Wow… I wonder if they will now let you “recycle” your maintenance contract into a nice refund check!

ArcGIS Server allows you to run long-running custom code of your own creation. They’re not necessarily admitting they’re at fault here; it could be the user’s code that’s unstable. It seems hardly different from the “Recovery” tab for Windows Services.

I like the guys in Redlands, but yes, it sounds like they’re barbers who still use leeches for bloodletting to improve your health.

Another way to look at it is that this fixes the problem twice. That doesn’t mean they should ignore problems, but you can fix leaks in the code *and* guard against leaks (or any other problem) in the deployment. People who deploy their server have no interest in whatever leaks occur: they can’t fix them, they don’t care to diagnose them, and they probably don’t really care whether they exist as long as they don’t affect their deployments.

Apache has done this in its server just about forever (the maximum-requests setting for worker processes, which defaults to some large but not-that-large number). Practical solutions like this are part of what makes Apache so reliable.

Huh. Ok, if this really is a feature people desire, I’d be happy to try to get it implemented in GeoServer. I do feel that for users’ code they could build in some isolation, so that user code can’t just take over processes and make the system unstable. I’m also not sure that Apache’s max settings for worker processes are really the same thing; limiting the number of processes seems a bit different from forcing a hard restart.

Anyone who has done serious ASP.NET development knows that even Microsoft has this feature. As a matter of fact, if the ASP.NET process exceeds a given threshold on resources, it will automagically restart.
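Recycling on a resource threshold differs from interval-based recycling only in what triggers the restart. A toy, single-process Python simulation makes the difference visible; `LeakyWorker`, the per-request leak and the threshold are all hypothetical, and this is not how ASP.NET or IIS is actually configured.

```python
class LeakyWorker:
    """Hypothetical worker whose memory grows by a fixed amount per request."""

    def __init__(self, leak_per_request_mb):
        self.leak_per_request_mb = leak_per_request_mb
        self.memory_mb = 0.0

    def handle_request(self):
        # Simulated leak: memory is never given back while the worker lives.
        self.memory_mb += self.leak_per_request_mb


def serve(n_requests, leak_per_request_mb, threshold_mb):
    """Serve requests, replacing the worker whenever it reaches the threshold.

    Returns (restarts, peak_memory_mb).
    """
    worker = LeakyWorker(leak_per_request_mb)
    restarts = 0
    peak = 0.0
    for _ in range(n_requests):
        worker.handle_request()
        peak = max(peak, worker.memory_mb)
        # Threshold-based recycling: a fresh worker starts with no leaked memory.
        if worker.memory_mb >= threshold_mb:
            worker = LeakyWorker(leak_per_request_mb)
            restarts += 1
    return restarts, peak


if __name__ == "__main__":
    print(serve(100, leak_per_request_mb=1.0, threshold_mb=30.0))
    print(serve(100, leak_per_request_mb=1.0, threshold_mb=float("inf")))
```

The leak is still there in both runs; recycling only caps how large it can grow before the process is thrown away.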

The dev team at ESRI know what they are doing here, and it is no different from any extensible server out there today, from Microsoft or whoever.

Chris, the Apache thing does not limit the number of processes, but the number of requests a process (used as a module or via FastCGI, I don’t know the details) is going to serve. After that, the process is killed and re-created. AFAIK PHP is set to 200 by default; if you go much beyond that, memory leaks become apparent.
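Python’s standard library exposes the same idea as Apache’s per-process request limit: `multiprocessing.Pool` accepts a `maxtasksperchild` argument, after which a worker process exits and is replaced by a fresh one. A small sketch, assuming a POSIX system (it uses the `fork` start method); `handle_request` and `count_workers` are illustrative names, not part of any server.

```python
import multiprocessing as mp
import os


def handle_request(request_id):
    # Stand-in for real work; it only reports which worker process served it.
    return os.getpid()


def count_workers(n_requests, max_requests_per_worker):
    """Serve n_requests on a one-process pool and count the distinct workers used."""
    # "fork" (POSIX only) starts workers as copies of this process, so the
    # main module is not re-imported in each worker.
    ctx = mp.get_context("fork")
    with ctx.Pool(processes=1, maxtasksperchild=max_requests_per_worker) as pool:
        # chunksize=1 makes every request its own task, so the limit counts requests.
        pids = pool.map(handle_request, range(n_requests), chunksize=1)
    return len(set(pids))


if __name__ == "__main__":
    print(count_workers(6, max_requests_per_worker=2))
    print(count_workers(6, max_requests_per_worker=None))
```

With one worker process and a limit of 2, six requests are served by three different processes; with no limit, a single process serves them all. Whatever a retired worker leaked disappears with it.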

Doing this in GeoServer is not exactly easy, by the way: we would have to do it at the web container level to make sure we actually throw away every possible memory leak.

These problems permeate the whole ESRI product line. It’s not just memory leaks in the underlying ArcObjects code (which, after all, is at its heart 10-year-old technology: unmanaged COM).

Most ESRI products (dating way back to MOIMS) seem to have trouble maintaining persistent connections across a network, such as to an ArcSDE server. These connections typically consume resources on the SDE server and are pretty fragile when it comes to staying viable through multiple TCP timeout cycles or other network voodoo. The result is that one end of the connection (either client or server) gets disconnected and the other end doesn’t know it. Usually, the only way to set everything to rights is to periodically restart the client (client meaning e.g. ArcIMS or ArcGIS Server). Besides frequent restarts, all manner of hackery has been suggested as remedies for this problem, including scheduling a map request at intervals to keep the network connections open.
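The keep-alive hack described above amounts to a timer that exercises the connection on a schedule. A minimal Python sketch, assuming a hypothetical `ping` callable that stands in for “request a small map”; it is not an ESRI API, and a failed ping is only counted here rather than triggering a reconnect.

```python
import threading
import time


class KeepAlive:
    """Call `ping` every `interval_s` seconds on a background thread.

    `ping` is a hypothetical callable standing in for "request a small map"
    or any other cheap call that exercises the connection.
    """

    def __init__(self, ping, interval_s):
        self._ping = ping
        self._interval_s = interval_s
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self.pings = 0
        self.failures = 0

    def _run(self):
        # Event.wait returns False on timeout (time to ping) and True once
        # stop() has set the event, which ends the loop.
        while not self._stop.wait(self._interval_s):
            self.pings += 1
            try:
                self._ping()
            except Exception:
                # A failed ping is the sign the connection has silently died;
                # a real client would reconnect here instead of just counting.
                self.failures += 1

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()


if __name__ == "__main__":
    ka = KeepAlive(lambda: None, interval_s=0.1)
    ka.start()
    time.sleep(0.35)
    ka.stop()
    print(ka.pings, ka.failures)
```

This keeps traffic flowing so idle timeouts are less likely to fire, but it does nothing for a connection that has already gone half-open except report it.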

But, of course, the only real solution is to not depend on a connection between two ESRI clients.